Introduction: Responsible AI Starts with Solid GRC Foundations

Artificial Intelligence (AI) has the power to revolutionize business, but without structure, it can expose companies to new ethical, regulatory and operational risks. Researchers and practitioners alike emphasize that AI is changing not just technology but the nature of risk itself.

From data privacy risks to opaque decision-making and fast-evolving regulations, AI is challenging the very foundations of how organizations operate and govern themselves. That is where governance, risk and compliance (GRC) comes in.

Before you can scale AI responsibly, you need a framework of trust: strong data governance, mature risk management, and enforceable compliance controls. These are the foundations that make AI not only powerful, but also safe, strategic, and sustainable.

In this blog, we explore what “AI readiness” really means from a GRC perspective, and how platforms like ADOGRC help companies move from vision to execution.

Why GRC Is Essential for Responsible AI

From automating routine tasks to guiding high-stakes decisions, AI is shaping both business processes and the risks tied to them, and GRC sits at the centre of this shift. This growing influence brings a range of challenges that GRC teams must address to keep AI both effective and accountable.

- AI amplifies risk across the organization. It processes vast and sensitive datasets, including personal, financial, and operational information, which raises significant privacy, security and regulatory compliance concerns, especially where governance practices are weak. GRC must track data provenance, ensure explainability, and maintain clear control structures.

- AI can introduce bias, make opaque decisions and conflict with ethical standards. It also increases regulatory complexity, as laws and guidelines struggle to keep pace with evolving AI capabilities.

- Without structured oversight, AI becomes a black box. Without human validation and transparency, it can lead to decisions that cannot be explained or verified.

These realities make GRC essential not only for risk management, but also for the secure and strategic use of AI.

The 3 Pillars of AI Readiness in GRC

Readiness for AI must be built on a solid GRC foundation. Governance, risk and compliance are the core pillars that enable responsible and scalable use of AI across the entire company.

3 pillars of AI readiness in GRC

1. Data Governance & Protection

Why it matters: AI systems are only as good as the data that powers them. Poor data governance can lead to biased outputs, privacy violations, or decisions that lack accountability.

With ADOGRC, you can:

- Define roles, responsibilities, and escalation paths for AI initiatives

- Map sensitive data assets to relevant processes and controls

- Run structured risk assessments on AI data access and usage

- Embed ethical and ESG considerations into approval workflows through your centralized compliance library

- Monitor how AI aligns with regulatory and strategic goals via dashboards and integrated workflows

Next: Enable continuous monitoring of data integrity, retention, and privacy risks across AI systems – surfacing gaps, conflicts, or ethical concerns before deployment.
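To make the idea of mapping data assets to AI systems more concrete, here is a minimal Python sketch of a pre-deployment gap check. It is an illustration under our own assumptions, not ADOGRC's data model or API: the `DataAsset` and `AISystem` classes, the sensitivity labels, and the two rules (sensitive data requires a completed risk assessment; every asset needs a retention rule) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical sensitivity labels; replace with your own classification scheme.
PERSONAL = "personal"
FINANCIAL = "financial"
INTERNAL = "internal"
SENSITIVE = {PERSONAL, FINANCIAL}


@dataclass
class DataAsset:
    name: str
    sensitivity: str
    retention_days: Optional[int] = None  # None means no retention rule is defined


@dataclass
class AISystem:
    name: str
    owner: Optional[str] = None  # accountable person or role
    data_assets: list[DataAsset] = field(default_factory=list)
    risk_assessment_done: bool = False


def pre_deployment_gaps(system: AISystem) -> list[str]:
    """Collect governance gaps for one AI system using illustrative rules."""
    gaps = []
    if not system.owner:
        gaps.append(f"{system.name}: no accountable owner assigned")
    for asset in system.data_assets:
        # Sensitive data should not feed an AI system before it has been risk-assessed.
        if asset.sensitivity in SENSITIVE and not system.risk_assessment_done:
            gaps.append(f"{system.name}: uses {asset.sensitivity} data "
                        f"'{asset.name}' without a risk assessment")
        # Every asset needs a retention rule so data is not kept indefinitely.
        if asset.retention_days is None:
            gaps.append(f"{system.name}: no retention rule for '{asset.name}'")
    return gaps


# Hypothetical example: a support assistant using ticket data and a knowledge base.
tickets = DataAsset("support_tickets", PERSONAL, retention_days=365)
knowledge_base = DataAsset("knowledge_base", INTERNAL)
assistant = AISystem("support-assistant", data_assets=[tickets, knowledge_base])

for gap in pre_deployment_gaps(assistant):
    print(gap)
```

In practice, rules like these would come from your data governance policies rather than being hard-coded, but the principle is the same: gaps are surfaced automatically before the system goes live.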

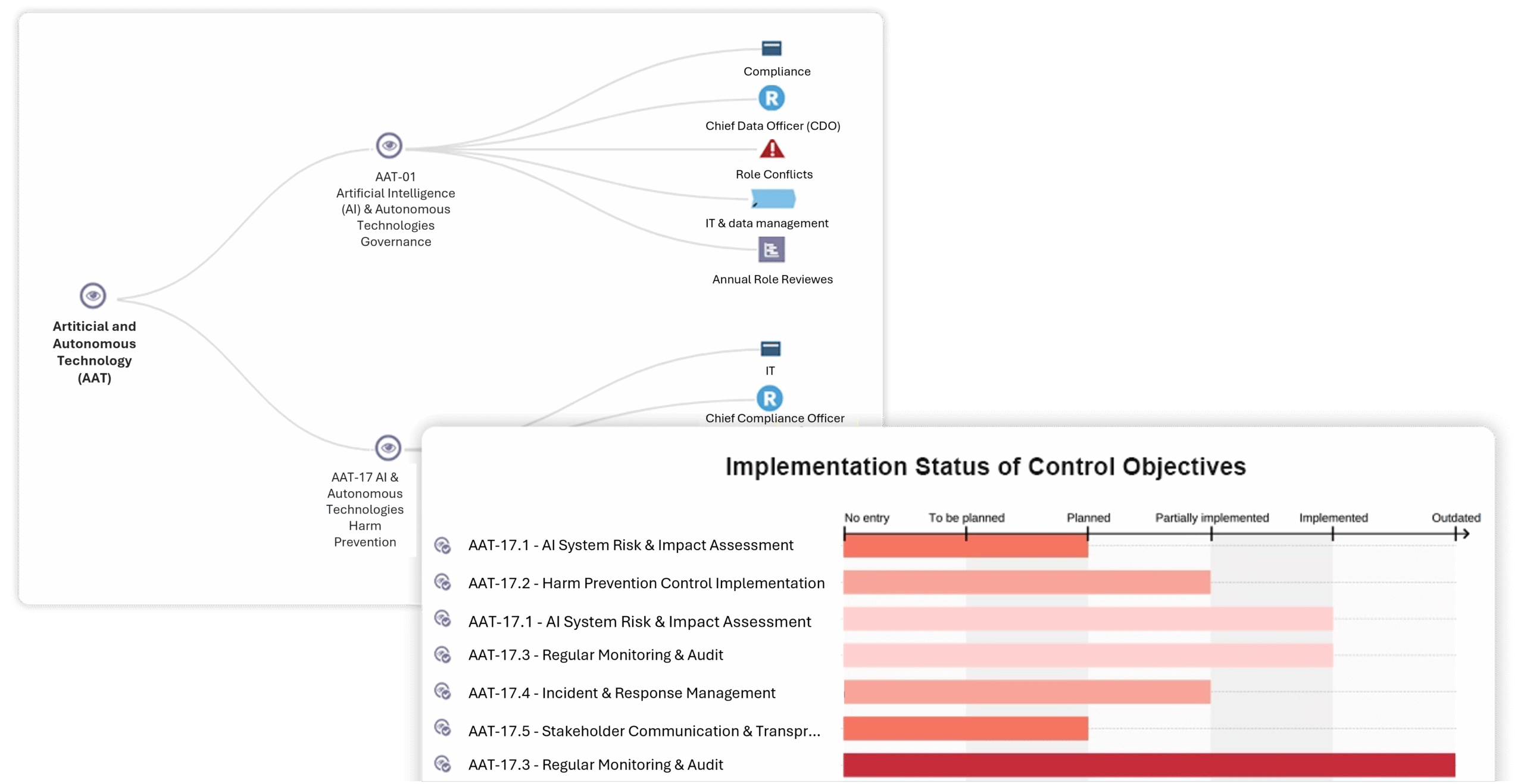

2. Risk Management & Internal Controls

Why it matters: AI introduces new risk categories, from model bias and drift to opaque decision-making and regulatory uncertainty. Without tailored controls, these risks can quickly escalate.

With ADOGRC, you can:

- Identify and categorize AI-specific risks across all areas

- Link AI models and systems to your risk register

- Assign AI use cases to risk and control inventories across your processes

- Implement controls for transparency, explainability and model validation

- Map AI components to your existing, tailored inventories to keep every GRC object transparent and under control

- Manage mitigation workflows and track the effectiveness of controls, monitoring routines and incident response

Anchoring AI Act requirements into your operations

Next: Integrate predictive risk scoring for AI-linked assets and automate detection of control gaps.
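Linking AI use cases to a risk register and checking for control gaps can be sketched in a few lines of Python. This is a hypothetical model, not how ADOGRC stores or scores risks: it assumes a 1 to 5 scale for likelihood and impact, a score of likelihood × impact, and treats any risk scoring 12 or more without at least one effective control as a gap. All class names, thresholds and register entries are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Control:
    name: str
    effective: bool  # outcome of the latest effectiveness test


@dataclass
class Risk:
    title: str
    use_case: str    # the AI use case this risk is linked to
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    controls: list[Control] = field(default_factory=list)

    @property
    def score(self) -> int:
        # Inherent risk score as likelihood x impact (range 1 to 25).
        return self.likelihood * self.impact


def control_gaps(register: list[Risk], threshold: int = 12) -> list[Risk]:
    """Risks at or above the threshold with no effective control, highest score first."""
    gaps = [r for r in register
            if r.score >= threshold and not any(c.effective for c in r.controls)]
    return sorted(gaps, key=lambda r: r.score, reverse=True)


# Hypothetical register entries for a customer support assistant.
register = [
    Risk("Biased ticket prioritization", "support-assistant", 3, 4,
         [Control("Quarterly bias review", effective=False)]),
    Risk("Model drift after product launch", "support-assistant", 4, 4),
    Risk("Hallucinated refund promises", "support-assistant", 3, 5,
         [Control("Human approval of refunds", effective=True)]),
    Risk("Outdated FAQ answers", "support-assistant", 2, 2),
]

for risk in control_gaps(register):
    print(f"{risk.score:>2}  {risk.title}")
```

Here the drift risk (16) and the bias risk (12, whose only control failed its last test) are flagged, while the refund risk is covered by an effective control. Scoring by likelihood times impact is only one common convention; a predictive approach would replace the static inputs with estimates derived from incidents, monitoring data or model metrics.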

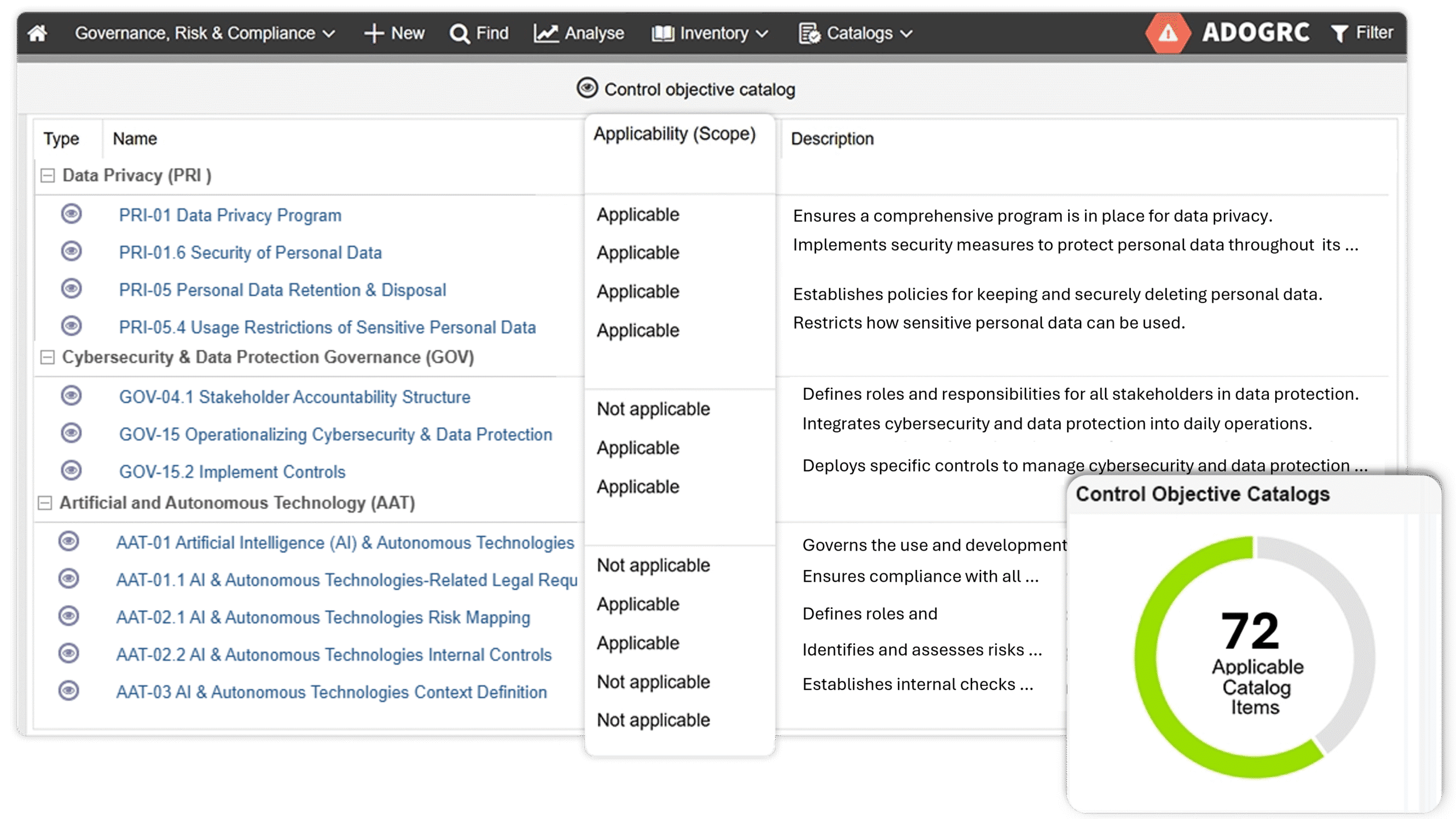

3. Compliance and Regulatory Alignment

Why it matters: New regulations like the EU AI Act will demand structured classification, documentation, and auditability of AI systems across development and usage.

With ADOGRC, you can:

- Align AI development with standards such as ISO/IEC 42001 and the NIST AI RMF, held centrally in your compliance library

- Maintain an inventory of AI-specific risks and their assessments to drive corrective measures

- Link compliance requirements to controls, approvals and documentation

- Monitor AI-specific risks and their mitigation measures in centralized dashboards and reports to stay audit-ready

Tailored scope of AI Act requirements

Next: Create dynamic compliance dashboards and automatically generate disclosures, reducing manual effort and keeping you ahead of regulators and stakeholders.

Hint: Think of these pillars as your AI safety net: they catch compliance gaps, mitigate risks, and help demonstrate trustworthiness to regulators.

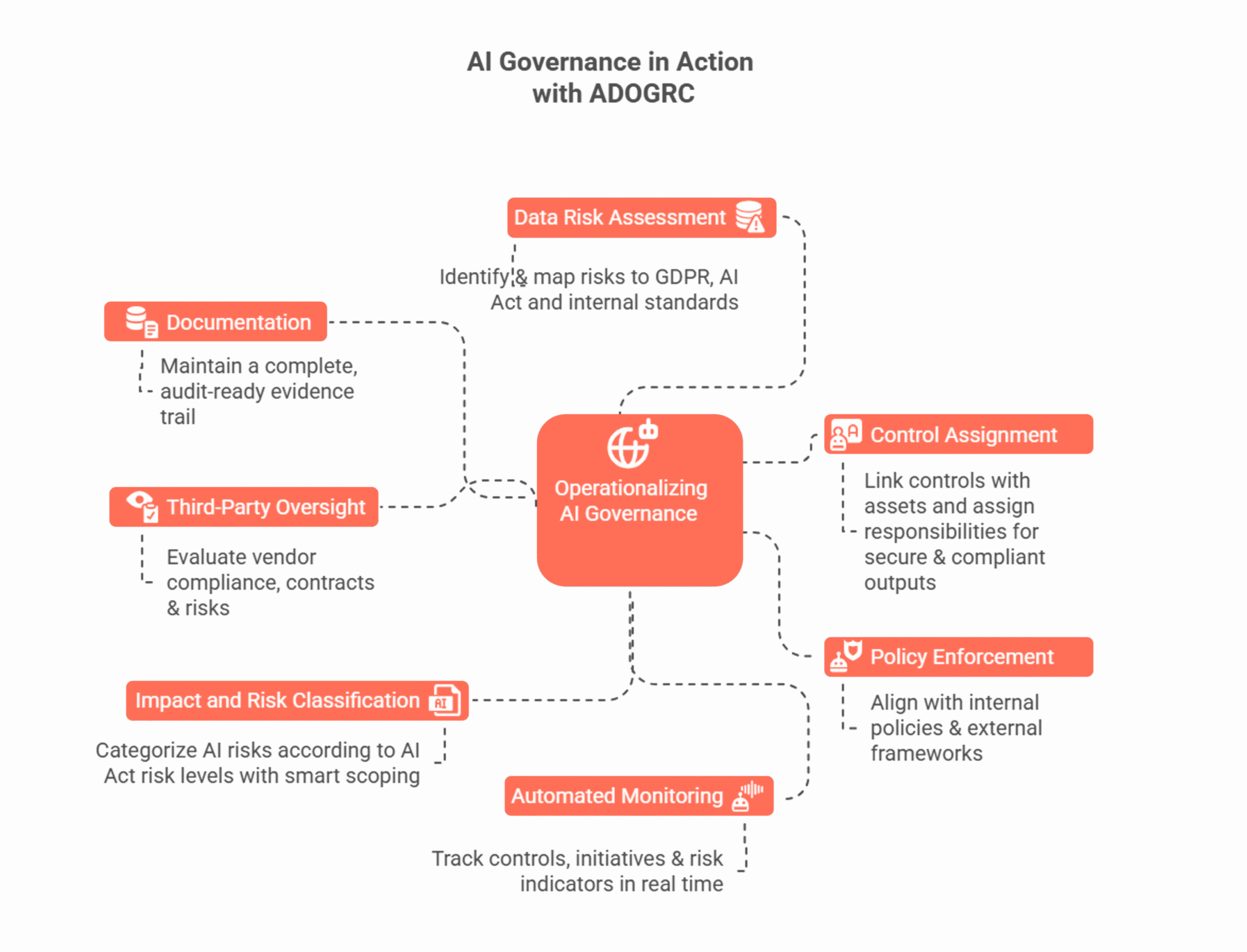

From Principles to Practice: Operationalizing AI Governance

Many companies have AI principles, but few have an operating model that turns them into responsible and, above all, holistic practice.

ADOGRC closes this gap by transforming compliance frameworks into role-based tasks and controls that are enforced through workflows. GRC thus becomes not just a guideline but a practical management approach that prepares companies for AI.

ADOGRC connects vision with execution by:

Defining accountability across compliance, risk, security, and IT

Map ownership directly to roles, systems, and processes, ensuring full traceability and zero ambiguity.

Turning policies into actions

Convert high-level principles into operational tasks, workflows, and controls that are trackable and enforceable from day one.

Monitoring readiness in real time

Use dashboards and indicators to keep continuous, rather than retroactive, visibility over compliance status, risk exposure, and implementation progress.

Centralizing everything that matters

Maintain one structured source of truth for AI-related obligations, aligned with frameworks such as the AI Act, GDPR, and ISO/IEC 42001.

Use Case Spotlight: Governing AI in Customer Support

Imagine your customer support team is piloting a generative AI assistant to optimize ticket processing. How do you ensure that this innovation doesn’t backfire?

With ADOGRC, you can:

1. Assess data risk

Evaluate risks to personal customer data with structured Requirement Assessments and map them directly to GDPR obligations, AI Act requirements, and internal data security standards, ensuring the AI assistant doesn’t expose sensitive customer information.

2. Assign controls

Use Compliance Anchoring to assign controls, each linked directly to the responsible person. This ensures that critical activities such as model retraining, human oversight, and data security are managed systematically, keeping AI-generated results compliant, secure, and relevant over time.

3. Enforce policies

Connect the use case to internal AI governance policies and to external frameworks such as ISO/IEC 42001 from the compliance library, keeping the AI assistant aligned with company rules and legal standards.

4. Monitor continuously

Use dashboards and reports to track control effectiveness, open actions, and risk indicators in real time, so issues can be addressed before they escalate.

5. Classify the risk

Classify the AI assistant according to the risk levels of the AI Act and filter applicable obligations with smarter scoping, focusing only on the requirements that really matter, all in your tailored compliance library.

6. Vet third parties

If the AI model is sourced externally, verify compliance with regulations, contracts, and risk standards to keep your AI assistant’s supply chain trustworthy. With ADOGRC’s third-party risk management, you can analyze key supplier data, from identity and cost to business impact, in one view.

7. Document everything

Centrally log every action, approval, assessment, and change, making it easy to prove the AI assistant is governed, secure, and audit-ready.
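Steps 5 and 7 can be illustrated with a small Python sketch that triages a use case into the AI Act's four risk tiers, looks up the obligations in scope, and records each step in an audit trail. The classification rules and obligation lists are deliberately simplified and hypothetical (the high-risk list is abbreviated); classifying a real system requires legal review against the Regulation itself. The function names and the in-memory `audit_log` are assumptions for illustration, not ADOGRC features.

```python
from datetime import datetime, timezone
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk (transparency)"
    MINIMAL = "minimal risk"


# Hypothetical, heavily simplified obligation catalogue keyed by tier.
OBLIGATIONS = {
    RiskTier.PROHIBITED: ["Do not place on the market or use"],
    RiskTier.HIGH: ["Risk management system", "Data governance", "Technical documentation",
                    "Record-keeping", "Human oversight", "Conformity assessment"],
    RiskTier.LIMITED: ["Inform users they are interacting with an AI system"],
    RiskTier.MINIMAL: [],
}

audit_log = []  # stand-in for a central audit trail; entries are only ever appended


def log(action: str, detail: str) -> None:
    """Append a timestamped entry to the audit trail."""
    audit_log.append({"at": datetime.now(timezone.utc).isoformat(),
                      "action": action, "detail": detail})


def classify(use_case: dict) -> RiskTier:
    """Simplified, illustrative triage; not a legal determination."""
    if use_case.get("prohibited_practice"):
        tier = RiskTier.PROHIBITED
    elif use_case.get("high_risk_area"):
        tier = RiskTier.HIGH
    elif use_case.get("interacts_with_people"):
        tier = RiskTier.LIMITED
    else:
        tier = RiskTier.MINIMAL
    log("classified", f"{use_case['name']} -> {tier.value}")
    return tier


def scoped_obligations(use_case: dict) -> list[str]:
    """Return only the obligations that apply to the use case's tier."""
    tier = classify(use_case)
    obligations = list(OBLIGATIONS[tier])
    log("scoped", f"{use_case['name']}: {len(obligations)} obligation(s) in scope")
    return obligations


# Hypothetical customer support assistant that chats directly with customers.
assistant = {"name": "support-assistant", "interacts_with_people": True}
print(scoped_obligations(assistant))
for entry in audit_log:
    print(entry["action"], "|", entry["detail"])
```

Appending entries rather than overwriting them mirrors the idea behind step 7: each classification and scoping decision leaves a timestamped record that can be shown to an auditor.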

How ADOGRC puts AI Governance into practice

Conclusion: Responsible AI Requires Responsible GRC

AI readiness is about more than technology. It’s about structure, oversight, and trust.

With strong GRC practices in place, you ensure that:

- AI risks are identified and addressed early

- Policies are consistently applied and enforced

- Decisions made by AI can be explained, audited, and improved over time

Platforms like ADOGRC turn these principles into reality, connecting strategic vision to day-to-day execution. From risk registers to real-time dashboards, from central compliance frameworks to automated workflows, ADOGRC operationalizes AI governance so that trust, accountability, and compliance are built in from day one.

AI will reshape your business. GRC will decide whether that transformation is sustainable, ethical, and ready for whatever comes next. Now is the time to make your organization AI-ready – on your terms.