
Why AI Needs Enterprise Architecture Data

Reliable information. Better decisions. Only with BOC Products.

This guiding principle also applies to the age of AI. Large language models and AI copilots are powerful, but to truly support better business decisions, they need reliable, structured, and context-rich enterprise data.

The BOC products deliver exactly that, and ADOIT, as the dedicated enterprise architecture repository, plays a key role. ADOIT captures capabilities, applications, and business processes in a trusted single source of truth.

Connecting this enterprise architecture (EA) knowledge base to AI systems lets AI assistants draw on that data directly, without custom integrations or complex middleware. This blog post introduces ADOIT's built-in Model Context Protocol (MCP) server.

What Is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is an emerging standard designed to connect external data sources and tools with AI systems. It defines two main roles:

- MCP server: Provides tools and exposes data through a standardized interface.

- MCP client: Consumes these tools within an AI ecosystem, such as an LLM-based assistant.

By standardizing these interactions, MCP makes it simple to integrate enterprise architecture data into AI environments.
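To make the two roles more concrete, here is a minimal sketch of the messages an MCP client might send to an MCP server. MCP messages use JSON-RPC 2.0, and `tools/list` and `tools/call` are the protocol's methods for discovering and invoking tools. The tool name `application_search` and its `name` input are assumptions made for illustration; they are not taken from the ADOIT documentation.

```python
import json

# Hypothetical tool descriptor, shaped like one entry in a `tools/list` result.
# The tool name and input field are illustrative, not ADOIT's actual naming.
application_search_tool = {
    "name": "application_search",
    "description": "Search for application components by name",
    "inputSchema": {
        "type": "object",
        "properties": {"name": {"type": "string"}},
        "required": ["name"],
    },
}


def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request, the envelope used for MCP messages."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# Client -> server: ask which tools are available.
list_request = make_request(1, "tools/list")

# Client -> server: invoke one tool with a value taken from the user's prompt.
call_request = make_request(
    2,
    "tools/call",
    {"name": application_search_tool["name"], "arguments": {"name": "payments"}},
)

print(json.dumps(call_request, indent=2))
```

In practice an MCP client library would build and send these messages; the sketch only shows their shape.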

ADOIT and Its MCP Server: A Seamless Bridge

With the ADOIT MCP server, organizations can connect EA data directly to AI systems and LLMs. AI assistants query the data through MCP tools, and any ADOIT REST API endpoint can be exposed as such a tool. Because the integration runs entirely through MCP, it requires no custom development.

How the ADOIT MCP Server Works

The process is designed to be simple yet powerful:

1. Tool Definition: ADOIT administrators define MCP tools. Each tool includes:

- A natural language description (e.g., "Search for application components by name").

- A REST call with placeholders, following the ADOIT REST API structure. For example: https://:/ADOIT/rest/4.0/repos?name=

- Instructions for how placeholders should be filled – typically by extracting values (like the application name) from the user's prompt.

2. Tool Registration

- Tools are registered in the AI ecosystem so that LLMs know how to use them.

- Registration includes tool names, inputs, and outputs.

3. Tool Execution

- When a user prompt triggers a tool, the LLM extracts the placeholder values, substitutes them into the REST call, and executes it against ADOIT.

- Structured results are returned via the ADOIT MCP server and used in the AI conversation.

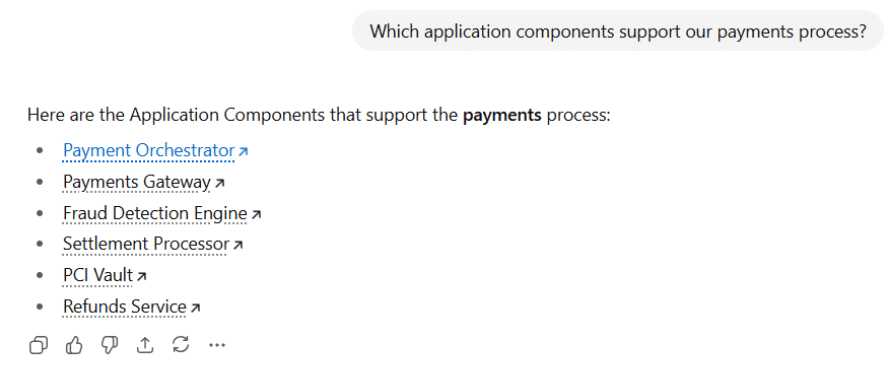
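The three steps above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not ADOIT's implementation: the `ToolDefinition` class, the `${name}` placeholder syntax (Python's `string.Template`), and the host `adoit.example.com` are all hypothetical. Only the path `/ADOIT/rest/4.0/repos?name=` comes from the example above.

```python
from dataclasses import dataclass
from string import Template
from urllib.parse import quote


@dataclass(frozen=True)
class ToolDefinition:
    """Hypothetical container for what an administrator defines in step 1."""
    name: str
    description: str        # natural language description
    rest_template: str      # REST call with placeholders
    placeholder_hints: dict  # placeholder -> how to fill it from the prompt


def registration_payload(tool):
    """Step 2: the facts an AI ecosystem needs in order to use the tool."""
    return {
        "name": tool.name,
        "description": tool.description,
        "inputs": sorted(tool.placeholder_hints),
    }


def build_url(tool, values):
    """Step 3: substitute URL-encoded placeholder values into the REST call."""
    missing = set(tool.placeholder_hints) - set(values)
    if missing:
        raise ValueError(f"missing placeholder values: {sorted(missing)}")
    encoded = {key: quote(str(value), safe="") for key, value in values.items()}
    return Template(tool.rest_template).substitute(encoded)


# Assumed host and placeholder syntax; the path follows the article's example.
search_tool = ToolDefinition(
    name="application_search",
    description="Search for application components by name",
    rest_template="https://adoit.example.com/ADOIT/rest/4.0/repos?name=${name}",
    placeholder_hints={"name": "Application name mentioned in the user's prompt"},
)

print(registration_payload(search_tool))
print(build_url(search_tool, {"name": "payments & cards"}))
```

URL-encoding the extracted value before substitution matters because the value comes from free-form user text and may contain characters such as spaces or `&` that would otherwise change the query string.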

Example Use Case: Application Search in LLM Chat

One of the best-practice MCP tools included in ADOIT is Application Search.

Imagine a project manager asking their AI assistant:

"Which application components support our payments process?"

The assistant calls the Application Search tool, substitutes "payments" into the REST call, and queries ADOIT. The ADOIT MCP server returns the relevant application components together with their properties, which the LLM then incorporates into its response.

Here's an example of how this could look in practice:

AI assistant powered by the ADOIT MCP server returning Application Components that support the payments process.

This demonstrates how enterprise architecture data can enrich LLM conversations and support faster, more informed decision-making.
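As a rough sketch of the last hop, the snippet below wraps structured records in the `content` list shape that MCP uses for tool results and then turns them into short lines an assistant could cite. The record fields (`name`, `type`) and the sample values are made-up placeholders; real ADOIT responses will carry their own properties.

```python
import json


def to_tool_result(components):
    """Wrap structured records in the `content` shape of an MCP tool result."""
    return {
        "content": [{"type": "text", "text": json.dumps(components, indent=2)}],
        "isError": False,
    }


def summarize(tool_result):
    """Turn the tool result into short lines for use in the conversation."""
    records = json.loads(tool_result["content"][0]["text"])
    return [f"{record['name']} ({record['type']})" for record in records]


# Made-up placeholder records, not real ADOIT output.
sample_components = [
    {"name": "Example App A", "type": "Application Component"},
    {"name": "Example App B", "type": "Application Component"},
]

result = to_tool_result(sample_components)
for line in summarize(result):
    print(line)
```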

Why the ADOIT MCP Server Matters for Organizations

The ADOIT MCP server delivers value to several groups. Enterprise architects spend less time on manual lookups and reporting, because AI assistants can access EA data directly. AI initiatives gain structured, high-quality enterprise knowledge, which improves the accuracy of their outputs. Administrators can rely on ready-made best-practice tools and also configure custom tools for any REST endpoint, which keeps them in control of what each integration exposes.

Getting Started with the ADOIT MCP Server

Unlocking the benefits is simple:

- Activating the ADOIT MCP server opens EA data for AI use.

- Best-practice MCP tools (such as Application Search) are included and can be directly registered in the AI ecosystem.

- Custom tools can be created by mapping natural language queries to REST calls, giving organizations full control over what knowledge is exposed.

With ready-to-use tools and flexible customization, ADOIT makes AI and enterprise architecture integration effortless.

Conclusion: EA Meets AI with ADOIT

With its integrated MCP server, ADOIT brings enterprise architecture data directly into AI ecosystems. Instead of being locked in specialist tools, EA knowledge becomes accessible in everyday LLM interactions – from quick queries to strategic decision support.

The BOC products deliver reliable information for better decisions. With ADOIT, that same principle now extends to the world of AI. We hope you'll enjoy it.