
Introduction

In many organisations, Business Process Management (BPM) has quietly become a paradox. It is widely adopted, deeply embedded in transformation initiatives, and increasingly expected to support compliance, automation, and performance – yet it is still often treated as a documentation exercise rather than a strategic capability.

As regulatory pressure increases, AI adoption accelerates, and operational complexity grows, this gap is becoming harder to ignore. BPM is no longer expected to simply describe how work should happen. It is expected to hold organisations together as change becomes continuous.

This shift is also reflected in our BPM Study 2025, which draws on insights from nearly 300 organisations across a wide range of industries. The results show that while BPM adoption is widespread, maturity remains uneven and largely stagnant. Most organisations continue to operate at early to mid levels of BPM maturity, with little movement between 2023 and 2025 – and only around 15% have reached a more advanced level.

In other words: expectations of BPM are rising faster than BPM maturity.

For 2026, the real question is no longer whether organisations need BPM, but what they now expect BPM to deliver. The trends below trace how BPM is evolving to meet those expectations, from driving real adoption and usability, to embedding evidence and governance, and ultimately becoming the foundation for automation and transformation.

Trend 1: Process Experience Drives Adoption

As expectations of BPM increase, one challenge surfaces immediately: processes only deliver value if people actually use them. No matter how mature the models or frameworks behind them are, BPM cannot fulfil its role as operational infrastructure without broad, consistent adoption.

In 2026, the differentiator is therefore no longer whether organisations have process models, but whether employees can easily access and apply process knowledge in their daily work.

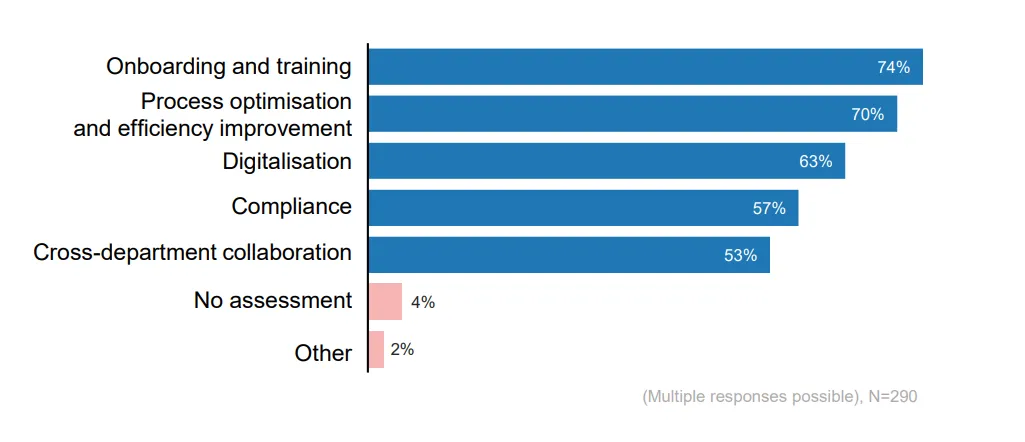

This is where BPM already proves its value today. The BPM Study shows that process documentation delivers its strongest impact in onboarding and training (74%), process optimisation (70%), digitalisation (63%), compliance (57%), and cross-department collaboration (53%). What these use cases have in common is not modelling sophistication, but usability: processes must be easy to find, easy to understand, and relevant to specific roles and tasks.

For ADONIS audiences, this aligns with how the Process Portal is positioned: making process knowledge an everyday asset through search, navigation via the process landscape, and role- or department-oriented access.

What changes in 2026:

- Publishing becomes a product discipline, with clear ownership, structure, and quality standards
- The process portal becomes the front door for “released truth”, not a static library
- Adoption is managed with the same seriousness as model quality

To illustrate this, imagine a new HR team member looking for the correct onboarding flow for a regulated location. Instead of navigating shared folders or intranet pages, they access a single, released process in the portal, with role-specific entry points and embedded work instructions aligned with the approved workflow. Questions are resolved consistently, based on a shared understanding of how the process is meant to run.

Once adoption is in place, the next lever is speed – and that is where AI enters the picture.

Trend 2: AI as a BPM Co-Pilot

AI in BPM is moving from experimentation to repeatable productivity.

The BPM Study shows that AI adoption is already material: 43% of organisations actively use large language models, and another 23% are evaluating AI-capable tools. At the same time, usage remains largely local and supportive, rather than embedded across the BPM lifecycle.

This reflects where many organisations currently stand: AI is applied where it feels low-risk, while broader integration is held back by unclear ownership, inconsistent data, and missing governance structures.

Why the shift starts now

In 2026, this begins to change, not because AI suddenly becomes more capable, but because organisations are under pressure to move beyond isolated pilots.

Analyst research supports this transition point. Gartner predicts that many generative AI initiatives will be abandoned after the proof-of-concept stage due to cost, complexity, weak data foundations, and unclear governance. At the same time, Gartner highlights the growing relevance of AI-ready data and agentic AI, signalling that scaling AI requires stronger operational foundations than experimentation alone can provide.

In parallel, research from Deloitte and McKinsey & Company shows that organisations are increasingly prioritising AI use cases with measurable productivity impact in core operations, particularly in documentation, analysis, coordination, and decision support. These areas align closely with BPM activities.

For BPM, this marks a shift from AI as a supporting tool to AI as a co-pilot: embedded into governed processes to accelerate understanding and change, without replacing accountability. Importantly, this does not mean organisations are moving toward fully autonomous process execution. Analyst research suggests the opposite: AI initiatives that bypass governance, reliable data, and operational ownership tend to stall or be abandoned – reinforcing BPM as the structuring layer that makes AI scalable, auditable, and trustworthy in practice.

What AI supports in BPM

In 2026, AI increasingly supports everyday BPM work where time is most often lost:

- Drafting and updating process documentation
- Summarising complex process context
- Classifying responsibilities, controls, and dependencies
- Accelerating understanding for non-experts

Rather than replacing BPM expertise, AI reduces friction, enabling more people to work effectively with process knowledge.

In ADONIS, this is reflected in how the AI Assistant supports different BPM tasks, from contextual assistance during process exploration to deeper support in design and documentation.

Governance and data readiness as the limiting factors

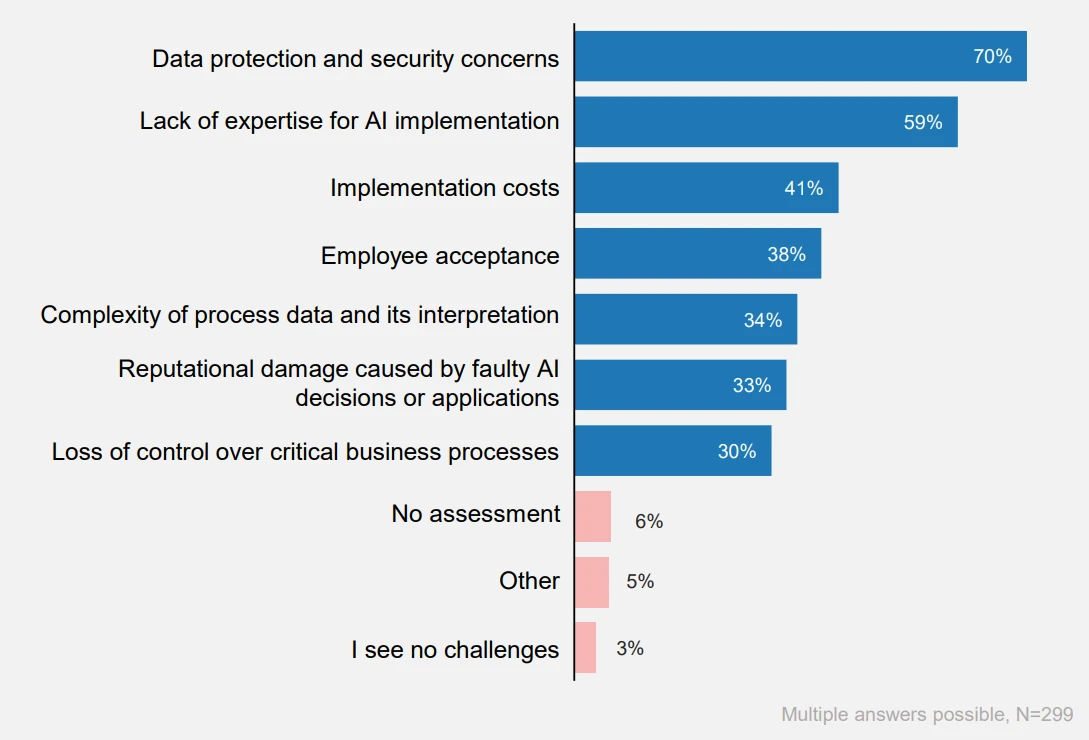

The main constraint on scaling AI in BPM is no longer technical capability, but governance readiness. The BPM Study highlights persistent barriers such as data protection and security concerns (70%), lack of expertise (59%), costs (41%), and limited acceptance (38%), alongside perceived risks including reputational damage from faulty or biased decisions (33%) and loss of control over critical processes (30%).

For BPM, the implication is direct: AI outputs must remain proposals until they are reviewed, approved, and released through defined roles and workflows. This preserves BPM as an authoritative source of truth, while still allowing AI to accelerate analysis and change.

Reliable data is a prerequisite. Analyst research consistently links AI success to AI-ready data – and for BPM this translates into a concrete requirement: a well-groomed, up-to-date, released process portfolio. Without this foundation, organisations remain stuck in local AI use cases and struggle to progress toward broader application.

A brief note on MCP

Model Context Protocol (MCP) plays a supporting role by standardising how LLM-based applications connect to tools and data sources. This reduces one-off integrations and enables more scalable AI patterns, but only when paired with clear process ownership, release standards, and governance boundaries provided by BPM.

Suggestions for implementation

- Restrict AI interaction to approved, released process content
- Standardise common question and usage patterns by role
- Define clear AI output handling rules: proposal, review, approval, and traceable change
- Establish AI-ready process knowledge through ownership, freshness routines, and enforced release standards
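The output-handling rule above (proposal, review, approval, traceable change) can be sketched as a small state machine. This is a minimal illustration under stated assumptions, not ADONIS functionality: the states, the role names `process_owner` and `approver`, the `AiSuggestion` class and the transition table are all hypothetical. What it shows is the property the text asks for: AI output cannot reach the released state without passing through defined roles, and every step leaves an audit entry recording who changed what, when, and why.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class State(Enum):
    PROPOSAL = "proposal"
    IN_REVIEW = "in_review"
    APPROVED = "approved"
    RELEASED = "released"
    REJECTED = "rejected"


# Hypothetical transition table: which role may move AI output from one state to the next.
ALLOWED = {
    (State.PROPOSAL, State.IN_REVIEW): "process_owner",
    (State.IN_REVIEW, State.APPROVED): "approver",
    (State.IN_REVIEW, State.REJECTED): "approver",
    (State.APPROVED, State.RELEASED): "process_owner",
}


@dataclass
class AiSuggestion:
    """Hypothetical wrapper: AI-generated content starts life as a proposal only."""
    process_id: str
    text: str
    state: State = State.PROPOSAL
    audit: list[dict] = field(default_factory=list)

    def transition(self, target: State, role: str, user: str, reason: str) -> None:
        required = ALLOWED.get((self.state, target))
        if required is None:
            raise ValueError(f"{self.state.value} -> {target.value} is not allowed")
        if role != required:
            raise PermissionError(f"{target.value} requires role {required!r}, got {role!r}")
        # Record who changed what, when and why before applying the change.
        self.audit.append({
            "from": self.state.value,
            "to": target.value,
            "user": user,
            "role": role,
            "reason": reason,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        self.state = target


# Governed path: proposal -> review -> approval -> release.
s = AiSuggestion("P2P-010", "Add a master-data check before invoice approval")
s.transition(State.IN_REVIEW, "process_owner", "anna", "Plausible, needs approval")
s.transition(State.APPROVED, "approver", "ben", "Matches existing control")
s.transition(State.RELEASED, "process_owner", "anna", "Released in portal")
print(s.state.value, len(s.audit))  # released 3

# Skipping review is refused: the AI output stays a proposal.
shortcut = AiSuggestion("P2P-010", "Remove the approval step")
try:
    shortcut.transition(State.RELEASED, "process_owner", "anna", "Looks fine")
except ValueError as err:
    print(err)  # proposal -> released is not allowed
```

Keeping the transition table as data rather than scattered `if` checks makes the governance rules themselves reviewable, which fits the idea that ownership and release standards, not the AI, decide what becomes the standard.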

AI increases speed. Governance and data quality determine whether that speed translates into sustainable value.

Trend 3: Process Mining Becomes the Evidence Standard

As BPM matures, intuition-first redesign is no longer sufficient. In 2026, improvement initiatives increasingly start with execution-level evidence, not assumptions.

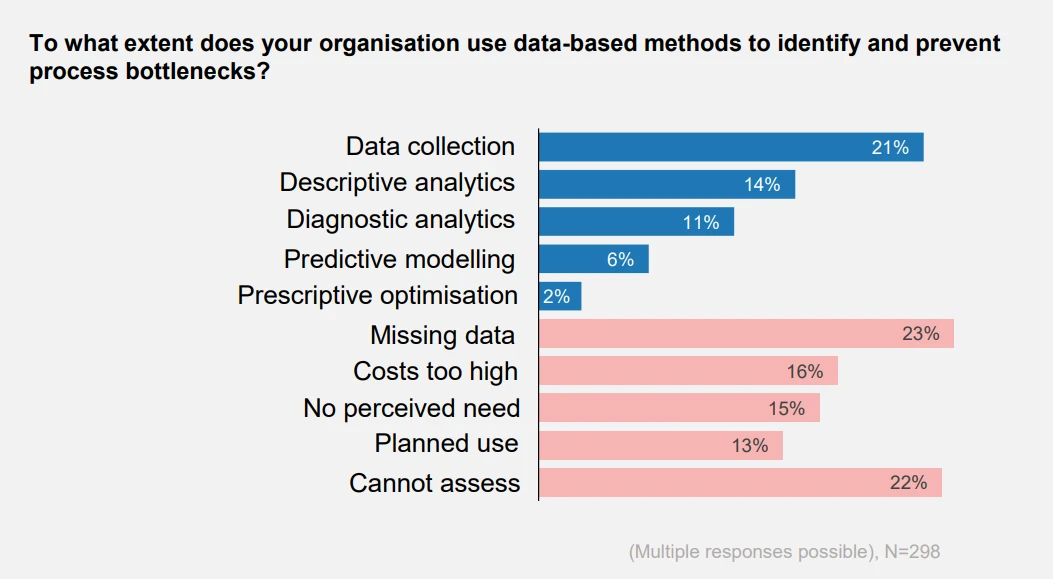

Process mining is not yet the default in most organisations, but it is clearly moving toward an evidence baseline for high-impact processes where deviation costs are high and change decisions carry risk. The BPM Study shows that many organisations still operate at early analytics maturity: 21% collect raw data only, 14% rely on descriptive analytics, and just 11% use diagnostic approaches such as process mining. At the same time, intent is growing: 13% plan to implement process mining.

In increasingly automated and interconnected environments, acting on incomplete or misleading insights is simply too costly.

External research reinforces this trajectory. Deloitte studies indicate that organisations are expanding process mining usage and increasingly combining it with AI to scale insight generation and root-cause analysis – driven by more digitised execution data, higher costs of variance, and growing pressure to justify improvement and automation decisions with measurable evidence.

Example:

A purchase-to-pay process appears well documented, yet late-payment fees persist. Process mining reveals that the root cause is not approval delays, but repeated rework triggered by missing master data and inconsistent invoice formats. The organisation fixes the standard at the point of failure, updates work instructions where the issue occurs, and stabilises execution – before introducing targeted automation for well-defined, repeatable steps.

This sequencing matters. Process mining does not automate processes – it provides the evidence needed to decide where automation is justified and safe.

For ADONIS audiences, this is the logic behind Process Mining Essentials: discovery, conformance checking, and performance analysis grounded in BPM context and governed improvement cycles.

Suggestions for implementation

- Define a clear end-to-end baseline and intended execution pattern before analysis
- Align event log requirements with BPM objects so insights map back to process knowledge
- Translate mining insights into a governed improvement backlog, with owners and release decisions
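To make the first two suggestions more concrete, here is a deliberately simplified conformance check. It assumes a hypothetical purchase-to-pay baseline expressed as allowed directly-follows pairs, and a hypothetical event log whose activity names match the released process model, which is exactly what aligning event log requirements with BPM objects makes possible. It flags transitions the baseline does not allow and activities repeated within a case (rework), echoing the purchase-to-pay example above. Real process mining tools use richer techniques such as alignment-based conformance checking; this sketch is not how ADONIS Process Mining Essentials works.

```python
from collections import Counter, defaultdict

# Hypothetical released baseline for purchase-to-pay, expressed as allowed
# directly-follows pairs (activity A may be immediately followed by activity B).
BASELINE = {
    ("Receive invoice", "Match to PO"),
    ("Match to PO", "Approve invoice"),
    ("Approve invoice", "Pay invoice"),
}

# Hypothetical event log rows: (case_id, activity, ISO timestamp).
# Activity names deliberately match the names used in the process model.
EVENT_LOG = [
    ("c1", "Receive invoice", "2025-01-02T09:00"),
    ("c1", "Match to PO", "2025-01-02T10:00"),
    ("c1", "Approve invoice", "2025-01-03T08:00"),
    ("c1", "Pay invoice", "2025-01-10T12:00"),
    ("c2", "Receive invoice", "2025-01-02T09:30"),
    ("c2", "Match to PO", "2025-01-02T11:00"),
    ("c2", "Request master data fix", "2025-01-04T15:00"),
    ("c2", "Match to PO", "2025-01-06T09:00"),
    ("c2", "Approve invoice", "2025-01-07T10:00"),
    ("c2", "Pay invoice", "2025-01-20T16:00"),
]


def traces(log):
    """Group events by case and order each case's activities by timestamp."""
    by_case = defaultdict(list)
    for case_id, activity, ts in log:
        by_case[case_id].append((ts, activity))
    return {case: [act for _, act in sorted(events)] for case, events in by_case.items()}


def check(trace, baseline):
    """Return directly-follows pairs missing from the baseline, and repeated activities (rework)."""
    deviations = [(a, b) for a, b in zip(trace, trace[1:]) if (a, b) not in baseline]
    rework = [act for act, count in Counter(trace).items() if count > 1]
    return deviations, rework


for case_id, trace in traces(EVENT_LOG).items():
    deviations, rework = check(trace, BASELINE)
    status = "conforms" if not deviations else f"deviates: {deviations}"
    print(case_id, status, "| rework:", rework or "none")
```

In this made-up log, case `c2` is flagged both for leaving the baseline (a master-data fix between matching and approval) and for repeating "Match to PO". In the governed loop described above, such a finding would enter the improvement backlog with an owner, rather than silently changing the standard.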

Evidence reveals deviations. Governance determines which of them become the new standard.

Trend 4: Compliance Is Built into BPM

In 2026, compliance increasingly shifts from a reactive activity to a built-in property of how processes are designed, approved, and changed.

This direction is reinforced by the BPM Study, where compliance remains a major value driver for process documentation (57%). What changes in 2026 is how compliance is operationalised. Rather than relying on post-audit remediation, organisations reduce audit friction by embedding requirements, controls, and responsibilities directly into BPM – and enforcing them through governed release routines.

This evolution mirrors established compliance and control thinking. Standards such as ISO 37301 emphasise systematic, traceable compliance management, while frameworks like COSO stress the importance of translating policies and controls into operational procedures that guide day-to-day execution. BPM provides the missing operational layer that turns these principles into practice.

In effect, BPM and GRC converge operationally:

- Processes define how work should happen and who is responsible
- Controls and policies are traceable in process context
- Evidence of execution and change is available on demand, supported by approvals, workflows, and audit trails

The result is not more documentation, but less manual compliance effort: clearer accountability, fewer uncontrolled local variants, and greater transparency around who approved what, when, and why.

Suggestions for implementation

- Map compliance requirements to explicit process steps and responsibilities
- Use auditable release workflows for approvals and changes
- Establish change-impact routines to ensure policies and controls remain aligned as processes evolve

With compliance embedded into BPM, governance becomes continuous – not episodic.

Trend 5: Standardisation Enables Scale

By 2026, scaling BPM is no longer about increasing modelling capacity. It is about increasing reuse.

As long as processes are copied, adapted, and maintained locally, every change becomes a one-off – slowing improvement, increasing variance, and weakening downstream initiatives. Standardisation addresses this by establishing shared, governed baselines that can be improved once and applied consistently across units, regions, and systems.

Standardisation does not eliminate flexibility. It makes variation explicit and governed. Clear distinctions between global standards, justified variants, and local adaptations enable scale without losing control.

Process architecture plays a critical role here. Reference structures such as the APQC Process Classification Framework provide a common language for structuring process landscapes, reducing duplication, and enabling meaningful comparison.

Suggestions for implementation

- Define which processes are global standards, which may vary, and under what conditions
- Introduce variant governance rules covering approval, documentation, and maintenance
- Use a consistent process taxonomy to eliminate near-identical processes and enable reuse across the landscape

Standardisation enables scale. Governance makes that scale manageable. Intelligence turns it into performance.

Trend 6: From Process Insight to Decision-Grade Intelligence

Process intelligence matters in 2026 when it supports decisions that hold up under scrutiny, not when it merely populates dashboards.

Many organisations already collect process metrics, but insight becomes decision-grade only when it is linked to accountability and action. This requires moving beyond reporting toward a closed loop where insights inform changes, and changes are measured against outcomes.

The trajectory is pragmatic:

- Strengthen descriptive and diagnostic insight first
- Link insights to accountable roles and business KPIs
- Extend into simulation where data quality and governance allow

Decision-grade insight reduces firefighting and improves prioritisation by making performance management tangible: teams can see what changed, who owns it, and whether it worked.

Suggestions for implementation

- Define a minimal KPI set per critical process with clear ownership
- Link insights directly to corrective actions and release decisions
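The closed loop described above (a KPI with a named owner, measured before and after a released change) can be sketched in a few lines. The `Kpi` class, the `evaluate` helper, the target value and the sample cycle times are all hypothetical; the sketch simply compares medians and reports two separate answers: whether the change helped, and whether the KPI now meets its target.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Kpi:
    """Hypothetical KPI definition tied to one critical process."""
    process: str
    name: str
    owner: str            # accountable role for this KPI
    target: float         # hypothetical target value
    lower_is_better: bool = True


def evaluate(kpi: Kpi, before: list[float], after: list[float]) -> dict:
    """Compare median values before and after a released change against the KPI target."""
    b, a = median(before), median(after)
    improved = a < b if kpi.lower_is_better else a > b
    on_target = a <= kpi.target if kpi.lower_is_better else a >= kpi.target
    return {"owner": kpi.owner, "before": b, "after": a,
            "improved": improved, "on_target": on_target}


cycle_time = Kpi(
    process="Purchase-to-pay",
    name="Invoice cycle time (days)",
    owner="P2P process owner",
    target=10.0,
)
result = evaluate(cycle_time, before=[18, 14, 21, 16, 19], after=[11, 9, 12, 10, 13])
print(result)
```

With this made-up data the change lowers the median cycle time from 18 to 11 days but misses the 10-day target, so the finding goes back to the named owner as a further corrective action instead of being closed.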

When insight is actionable, BPM becomes the foundation for transformation.

Trend 7: BPM as the Backbone of Automation

Automation does not fix unclear processes – it scales them.

In 2026, BPM becomes the transformation backbone because it provides clarity, ownership, and traceability across increasingly fragmented automation landscapes. Successful automation depends on everything that comes before: usable processes, governed AI support, execution-level evidence, embedded compliance, and standardised baselines.

Where these foundations are missing, automation amplifies inconsistency and exceptions. Where they are in place, automation becomes repeatable, measurable, and sustainable.

Why BPM matters even more as automation evolves

Automation patterns are becoming more powerful and more autonomous. Analyst research, including Gartner, points to growing interest in AI-assisted and agent-based automation – while also showing that initiatives stall when governance, value definition, and operational control are weak.

The implication for BPM is straightforward: as automation capability increases, tolerance for ambiguity decreases.

Even advanced automation depends on BPM to provide:

- Reliable context (released processes, responsibilities, rules)
- Clear ownership and approval logic
- Measurable and auditable outcomes

BPM remains the layer that ensures automation acts on what should happen, not just on what can be automated.

Automation that scales follows a disciplined logic

Successful automation initiatives consistently follow the same sequence:

- Define and release the process baseline
- Use evidence to identify improvement and automation candidates
- Govern change through approvals
- Measure outcomes against original objectives

This is also why many automation initiatives fail to deliver expected results. Teams often move too quickly from intention to implementation, automating workflows that are not yet stable, measurable, or well understood. Without a reliable process baseline and execution-level insight, assumptions get locked into code.

For a detailed walkthrough, see “Why Most Automation Fails – and How to Get It Right with ADONIS.”

Suggestions for implementation

- Require released baselines before automation proposals
- Treat automation as a governed change, not a technical shortcut
- Measure post-implementation outcomes and retire underperforming automations

2026 Is the Year BPM Becomes Operationally Intelligent

Business Process Management is evolving from a documentation discipline into a governing operational capability. In 2026, its value is defined not by whether processes exist, but by how effectively they are used, governed, improved, and scaled.

Organisations that succeed will execute BPM in the right sequence: adoption, acceleration, evidence, governance, scale, insight, and transformation.

How organisations respond to this shift will determine whether BPM becomes a stabilising force – or remains an underused asset. For those looking to take the next step, the focus now moves from understanding these trends to applying them in practice.

See how ADONIS supports this evolution, from process experience and AI assistance to mining, governance, and automation enablement.

References:

BPM Study 2025 (ZHAW School of Management and Law with BOC Group): key facts and study overview. www.boc-group.com

Gartner reports on AI-ready data, GenAI project abandonment, Agentic AI risk, and Hype Cycle framing. Gartner

Deloitte Global’s 2025 Predictions Report: Generative AI. Deloitte

McKinsey report on the state of AI in organizations. McKinsey & Company

Deloitte Global Process Mining Survey 2025. Deloitte

Gartner process mining / digital twin direction as cited by IBM. ibm.com

van der Aalst (process mining tutorial foundations, discovery and conformance concepts). vdaalst.rwth-aachen.de

ISO 37301 (Compliance management systems). ISO

COSO Internal Control – Integrated Framework (control activities embedded in operations). COSO

APQC Process Classification Framework (process architecture and common language). APQC

ISO 9001:2015 (performance evaluation and continual improvement principles). ISO

ADONIS documentation and knowledge hub pages (Process Portal, Release Workflows, Comments, Read & Explore state filtering, AI Assistant).

Model Context Protocol (MCP) specification and overview. GitHub