Introduction

Artificial intelligence (AI) has already found its place in companies. The opportunities are significant, but the risks continue to grow. Many organisations now struggle with opaque decisions, rising complexity, data protection, and legal uncertainty.

The EU AI Act is the first comprehensive law in Europe that regulates artificial intelligence. It entered into force on 1 August 2024. The main obligations will apply from August 2026 and cover organisations that develop or use AI systems in the EU. In practice, that includes almost every company.

Take the Readiness Check now and see if your AI systems are affected by the EU AI Act.

Companies must ensure transparency in how data is handled and how AI is supervised. They also need to prove that their systems work safely and follow legal requirements.

This blog post explains how the EU AI Act can be turned into clear governance steps and how ADOGRC supports companies in building AI readiness.

Why GRC Is the Bridge to the AI Act

Organisations that want to introduce AI responsibly should not view AI systems in isolation, but within clearly defined structures. The AI Act makes it clear that responsible AI use is only possible when companies can manage risks and demonstrate transparently how they do so.

This highlights why a solid GRC foundation is indispensable:

- Structures for accountability and control that clearly define who is responsible for which decisions.

- Procedures for risk assessment that identify biases, opaque decisions, or security gaps at an early stage.

- Reliable documentation and evidence that can withstand scrutiny from auditors and stakeholders.

- A basis for trust, ensuring that AI is not seen as a black box but as a traceable, explainable tool.

By translating the AI Act’s requirements into concrete processes, policies, controls, and measures, GRC plays a central role in ensuring compliance.

The Core Requirements of the EU AI Act

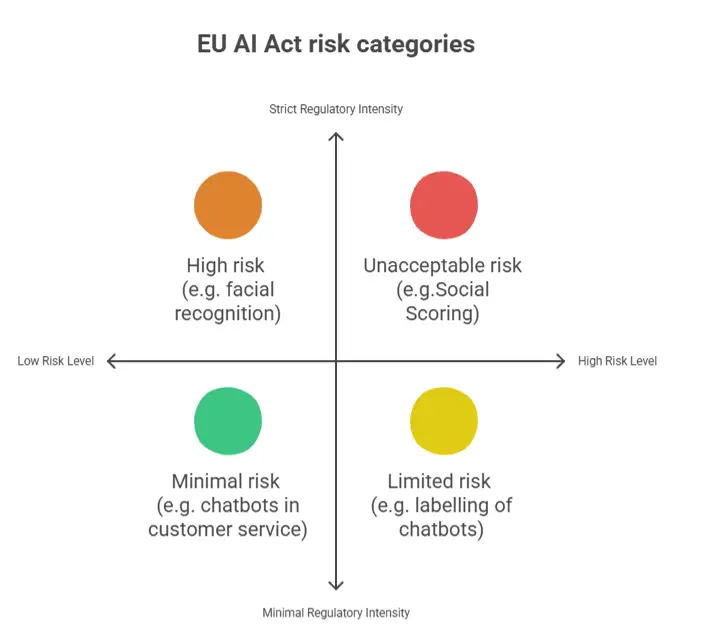

The EU AI Act Risk Categories

High-risk AI systems come with extensive obligations:

- Documentation and evidence covering data, training processes, and decision paths.

- Transparency and explainability of models.

- Human oversight to monitor and control critical decisions.

- Auditability – ensuring systems can be reviewed and traced at any time.

Meeting these requirements is nearly impossible without structured governance in place.

According to Gartner® (2024), 57% of organisations lack the structures and control mechanisms needed to deploy AI safely. In other words, the majority are not yet AI-ready – which makes action an immediate priority.

GRC as an Enabler for AI Act Readiness

Responsible use of AI requires a strong foundation — one that GRC provides. Its three core dimensions directly translate into AI Act readiness:

1. Data governance and protection

- Ensure and document the origin and quality of training data. Organisations must be able to prove at any time where their data comes from and whether it is suitable for building reliable AI models.

- Align GDPR and AI Act requirements consistently. Data protection rules under the GDPR must be harmonised with the AI Act’s principles of data quality and transparency.

2. Risk management and internal control

- Identify AI-specific risks such as bias, model failure, or incorrect decisions.

- Implement controls for transparency, model validation, and human oversight. A structured approach to supervision and monitoring ensures that decisions remain explainable and manageable.

3. Compliance and regulatory alignment

- Integrate AI Act requirements with standards such as ISO/IEC 42001 or the NIST AI RMF, embedded within existing compliance frameworks.

- Store all evidence, assessments, and measures centrally to ensure auditability and long-term traceability.

With a solid GRC foundation, organisations establish an integrated control system that makes AI deployment not only compliant but also scalable and reliable. By standardising processes, defining responsibilities, and managing risks systematically, organisations become AI-ready.

Hint: Read more about why AI readiness is rooted in GRC and how it forms the foundation for responsible and scalable AI.

From Principles to Processes: Operationalising the AI Act with ADOGRC

Many organisations have already defined their AI guidelines – yet often lack the operational models to enforce them effectively. This is where ADOGRC comes in:

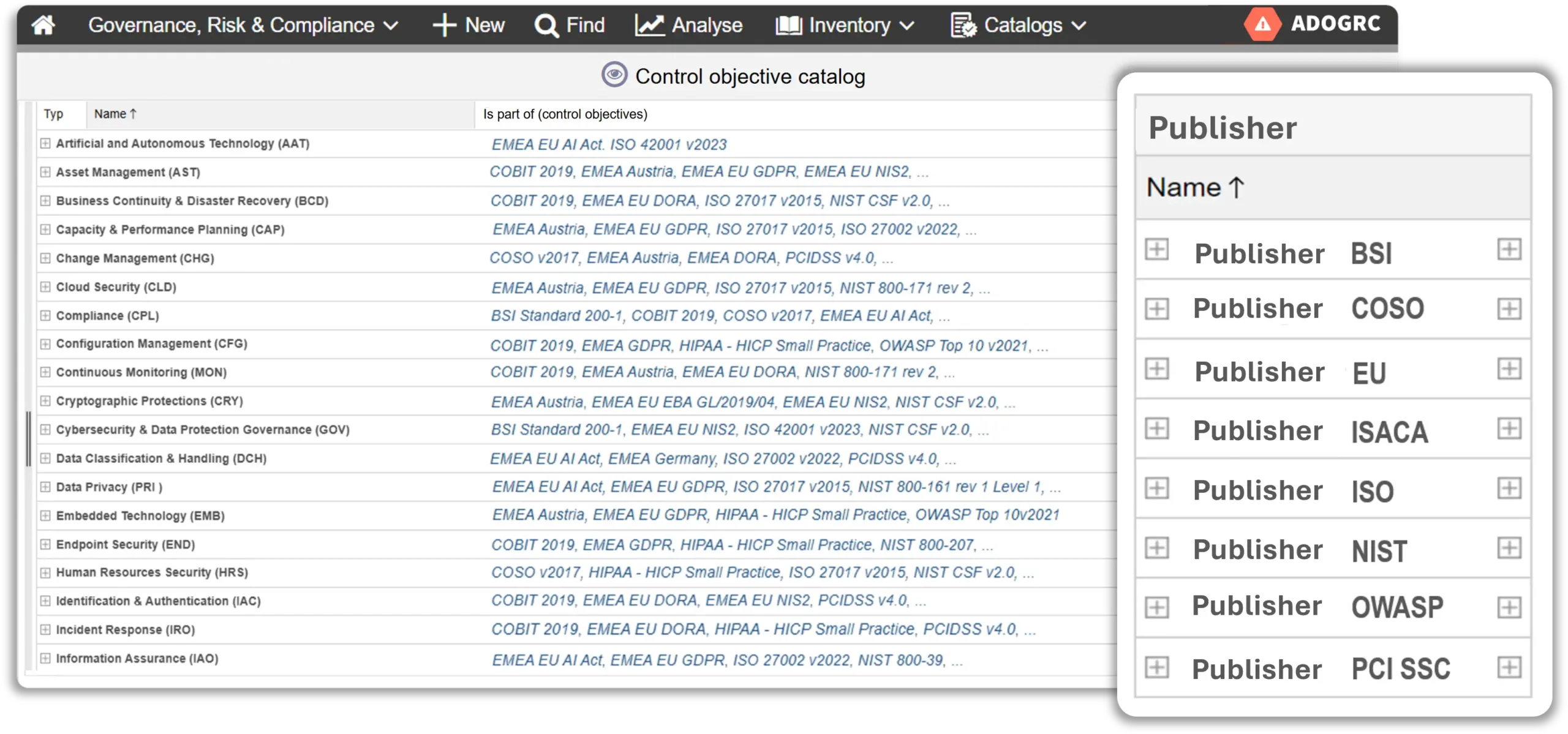

Central compliance library

All regulatory requirements – from the AI Act to industry-specific standards – are captured, maintained, and updated centrally within ADOGRC. This creates a single source of truth for all AI-related obligations.

ADOGRC’s Compliance Library

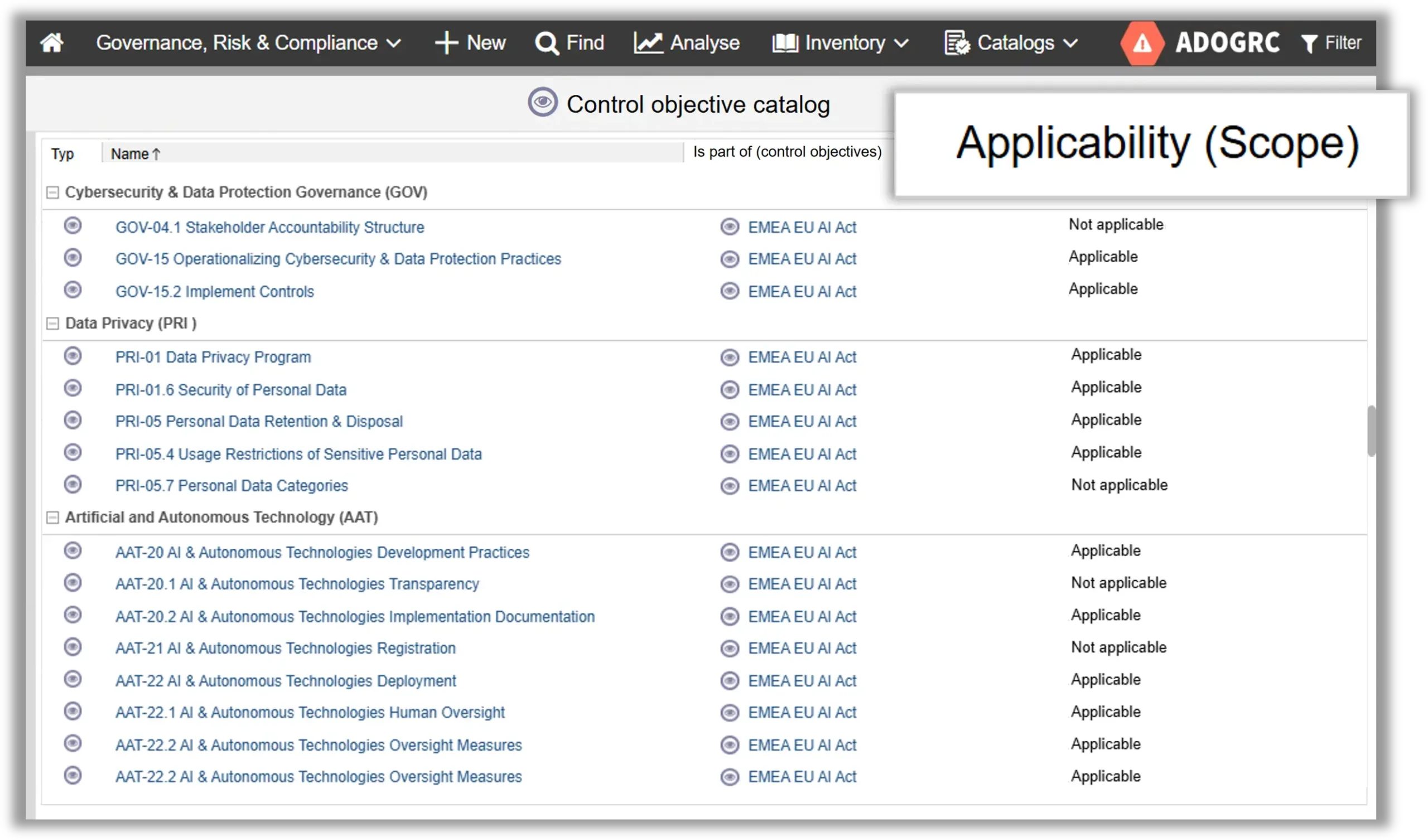

Scoping and relevance assessment

Relevant Specifications at a Glance

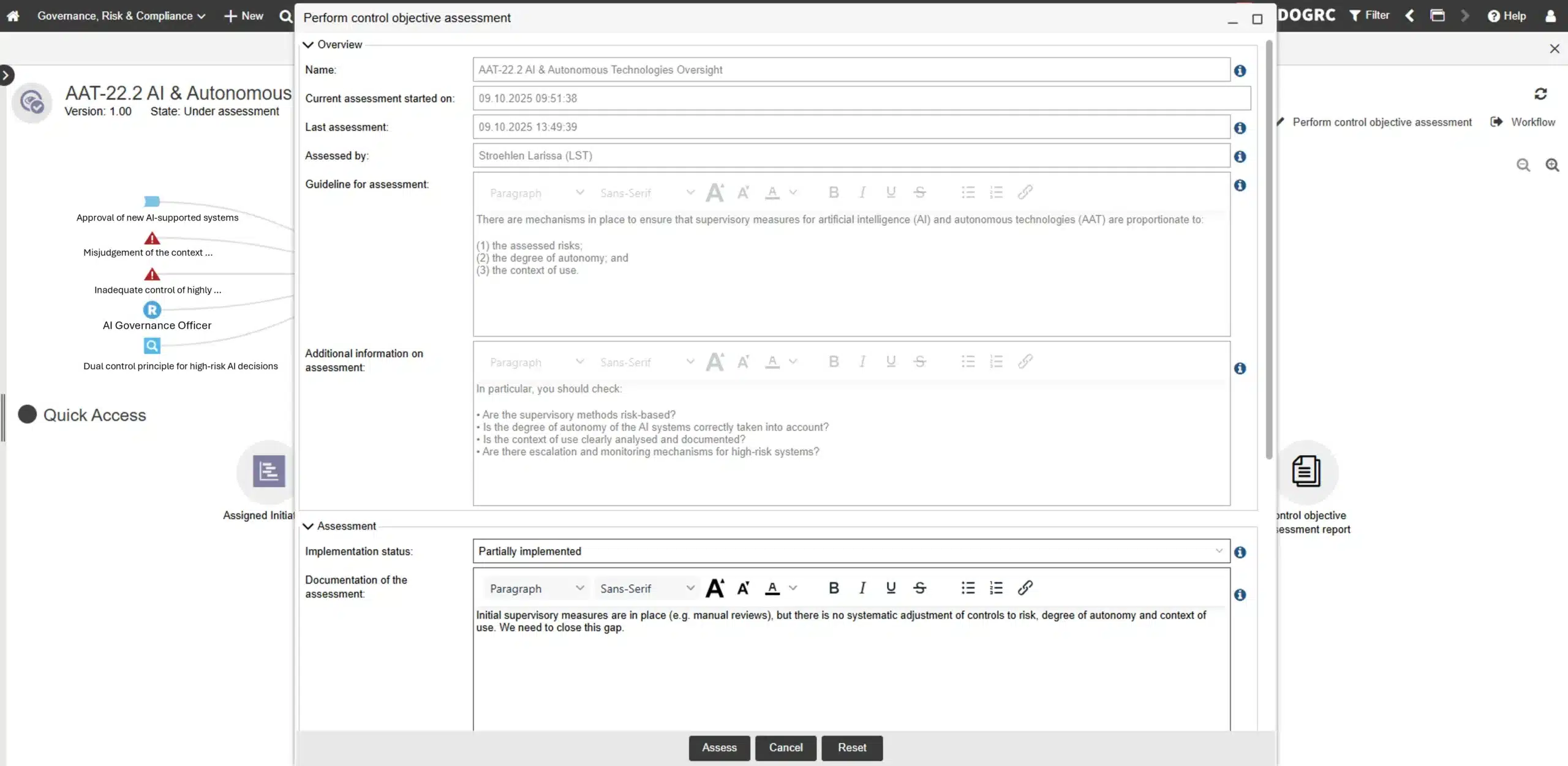

Audit-proof assessment

With ADOGRC’s standardised evaluations, you can verify whether the requirements of the AI Act are being met — documented, traceable, and audit-ready. Detailed views make gaps and areas for improvement immediately visible.

Detailed View of Requirement Assessment

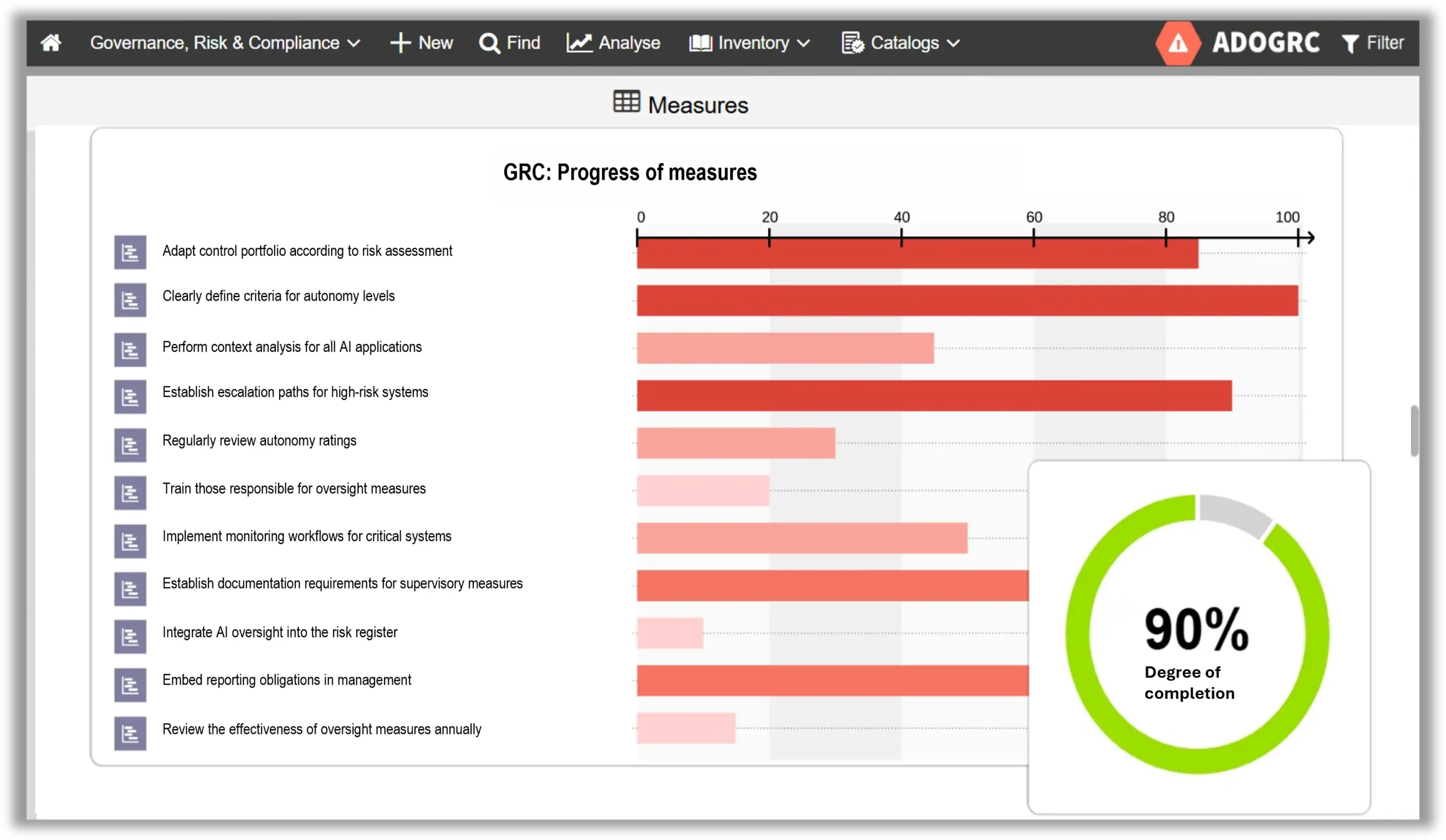
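To make the idea of a requirement assessment more concrete, here is a minimal Python sketch of how assessment results could be recorded and checked for gaps. It does not show ADOGRC's data model or API. The names `Status`, `RequirementAssessment`, and `find_gaps`, as well as the evidence identifiers, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    MET = "met"
    PARTIAL = "partially met"
    NOT_MET = "not met"


@dataclass
class RequirementAssessment:
    requirement: str    # short label for an obligation (hypothetical wording)
    status: Status      # result of the assessment
    evidence: str = ""  # pointer to supporting evidence; empty if none exists


def find_gaps(assessments):
    """Return every assessment that is not fully met or has no evidence attached."""
    return [a for a in assessments if a.status is not Status.MET or not a.evidence]


# Hypothetical example data, not taken from any real assessment.
assessments = [
    RequirementAssessment("Human oversight", Status.MET, "oversight-procedure-v2"),
    RequirementAssessment("Transparency and explainability", Status.PARTIAL, "model-card-draft"),
    RequirementAssessment("Documentation of training data", Status.MET),
]

for gap in find_gaps(assessments):
    print(f"{gap.requirement}: {gap.status.value}, evidence: {gap.evidence or 'missing'}")
```

In this sketch, a requirement marked as met but without evidence still counts as a gap, which reflects the point that compliance has to be demonstrable, not only claimed.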

Action tracking

Based on the assessment of your requirements, you can define and manage actions directly in ADOGRC. The platform ensures, through automated workflows, that responsibilities are assigned, deadlines are set, and progress is documented transparently. This way, no step in the AI compliance process goes unnoticed.

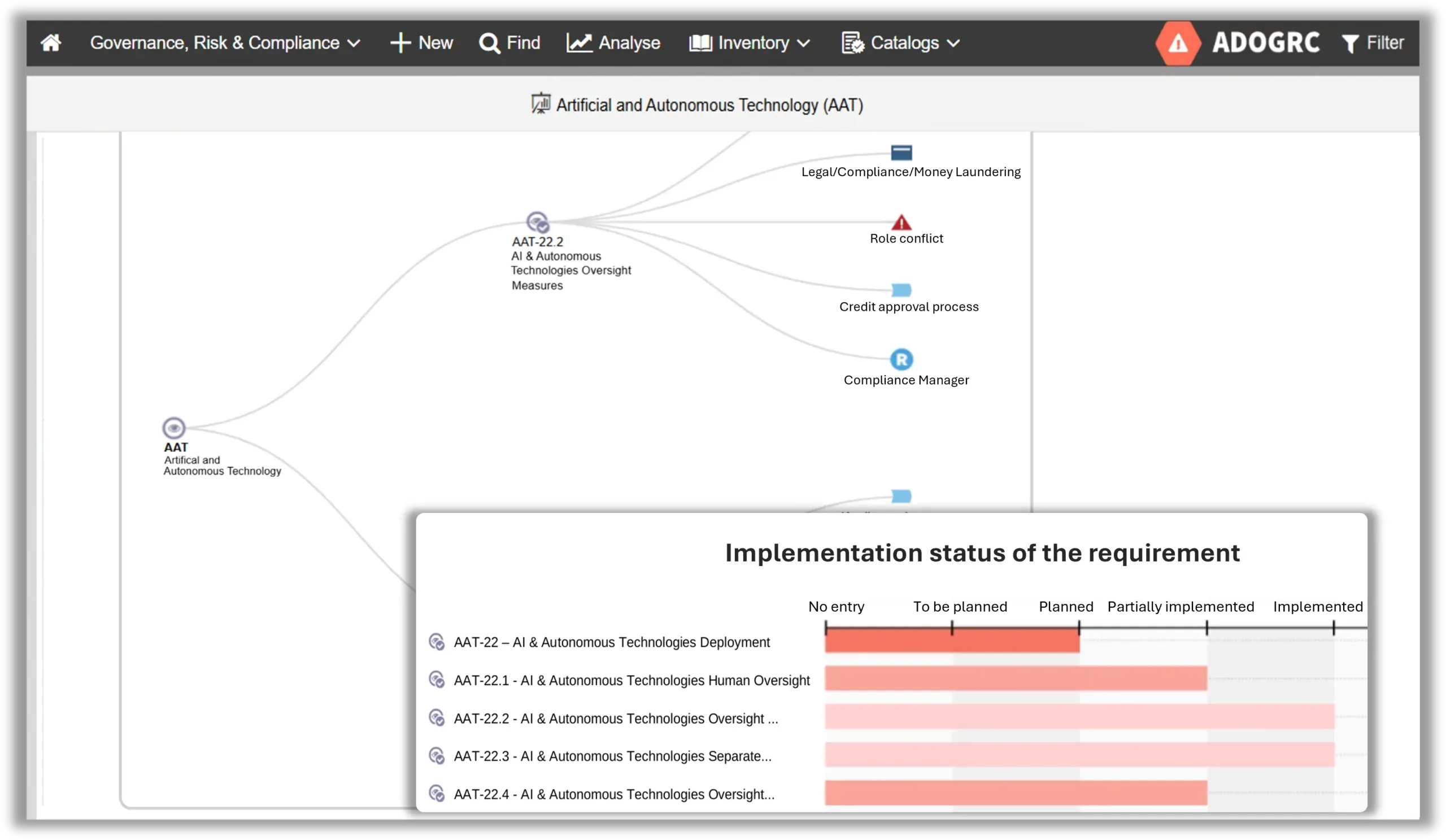
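The core of action tracking (an owner, a deadline, and a documented status per action) can be sketched in a few lines of Python. This is an illustrative sketch only and says nothing about how ADOGRC implements its workflows. The `Action` class, the `overdue` helper, and all example actions, owners, and dates are assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Action:
    title: str
    owner: str          # person or team responsible for the action
    due: date           # agreed deadline
    done: bool = False  # documented completion status


def overdue(actions, today):
    """Return open actions whose deadline lies before the given date."""
    return [a for a in actions if not a.done and a.due < today]


# Hypothetical actions, owners, and deadlines.
actions = [
    Action("Update privacy policy", "Legal", date(2026, 3, 31)),
    Action("Add AI disclosure to chat widget", "Product", date(2026, 6, 30), done=True),
    Action("Document escalation path to a human agent", "Customer Service", date(2026, 7, 15)),
]

# A fixed reference date keeps the example reproducible.
for action in overdue(actions, today=date(2026, 7, 1)):
    print(f"OVERDUE: {action.title} (owner: {action.owner}, due: {action.due.isoformat()})")
```

Passing the reference date in explicitly, rather than reading the system clock inside the function, keeps the check easy to test and to re-run for a past audit date.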

Compliance anchoring

With ADOGRC, you embed AI-related compliance directly into your processes. Policies, responsibilities, and control points are integrated into workflows, turning governance from a static document into an active part of daily practice.

Direct Linking of Policies, Responsibilities, and Controls to Processes

Use Case Spotlight: AI Act Compliance in Customer Service

Imagine a typical scenario: A company implements an AI assistant in customer service to process inquiries more efficiently. But how can it ensure that this use complies with the EU AI Act requirements? With ADOGRC, this can be handled in four steps:

Classify

The AI assistant is categorised as limited risk under the AI Act. Transparency obligations therefore apply: users must be informed that they are interacting with an AI system.

Assess risks

Based on the scoped requirements, the processed data is reviewed for GDPR compliance, and the AI assistant’s functionality is checked for alignment with the AI Act.

Define and document actions

In addition to controls, one-time actions are defined, such as updating the privacy policy or implementing a user information feature. All actions are documented and versioned centrally in ADOGRC, accessible at any time.

Ensure traceability

For internal audits or external reviews, ADOGRC provides transparent evidence of all implemented actions, controls, and responsibilities.
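The first step of the scenario, mapping a risk classification to the obligations that are in scope, can be illustrated with a small Python sketch. It is a simplification for illustration, not legal classification logic and not ADOGRC functionality. The tier is assumed to be assigned by a human reviewer. The names `RiskTier`, `OBLIGATIONS`, and `scoped_obligations` are hypothetical, and the sketch assumes that the obligations listed earlier in this post (documentation, transparency, human oversight, auditability) refer to high-risk systems.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative mapping only; the wording is shortened from this post, not from the legal text.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["Do not deploy (prohibited practice)"],
    RiskTier.HIGH: [
        "Documentation and evidence",
        "Transparency and explainability",
        "Human oversight",
        "Auditability",
    ],
    RiskTier.LIMITED: ["Inform users that they are interacting with an AI system"],
    RiskTier.MINIMAL: [],  # no specific obligations are listed in this post
}


def scoped_obligations(tier):
    """Return a copy of the obligations in scope for a reviewer-assigned risk tier."""
    return list(OBLIGATIONS[tier])


# The customer service assistant from the scenario, classified as limited risk.
assistant = {"name": "Customer service AI assistant", "tier": RiskTier.LIMITED}

for obligation in scoped_obligations(assistant["tier"]):
    print(f"{assistant['name']}: {obligation}")
```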

The result? With ADOGRC, innovation remains possible while regulatory risk stays under control.

Conclusion: The AI Act as an Opportunity for Sustainable AI Governance

The EU AI Act makes it clear that artificial intelligence can only realise its full potential when managed responsibly. Organisations that integrate a strong GRC foundation holistically into their strategy can identify risks early, operationalise controls, and translate regulatory requirements into concrete actions.

AI is fundamentally changing how organisations operate. GRC determines whether this transformation happens under regulatory pressure or as a strategic move that strengthens the organisation in the long term.